By Laurent Itti, itti@usc.edu, http://jevois.org, GPL v3

Video Mapping: YUYV 176 194 120.0 YUYV 176 144 120.0 JeVois FirstVision

Video Mapping: YUYV 352 194 120.0 YUYV 176 144 120.0 JeVois FirstVision

Video Mapping: YUYV 320 290 60.0 YUYV 320 240 60.0 JeVois FirstVision

Video Mapping: YUYV 640 290 60.0 YUYV 320 240 60.0 JeVois FirstVision

Video Mapping: NONE 0 0 0.0 YUYV 320 240 60.0 JeVois FirstVision

Video Mapping: NONE 0 0 0.0 YUYV 176 144 120.0 JeVois FirstVision
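Each video mapping uses the usual JeVois videomapping field order. It gives the USB output format, width, height and frame rate, then the camera sensor format, width, height and frame rate, then the vendor and module name. NONE as the output format means no video is streamed over USB.

The small sketch below splits a mapping into those fields. The class and function names are hypothetical helpers, not a JeVois API. It also derives two things you can read off the numbers:

- Every mode with USB output adds 50 rows below the camera image. These presumably hold the per-pipeline status text shown at the bottom of the demo video.
- The double-width modes leave room for a second panel. This is presumably the black-and-white HSV-thresholded view shown at right.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VideoMapping:
    """Fields of one JeVois video mapping (hypothetical helper, not a JeVois API)."""
    out_fmt: str
    out_w: int
    out_h: int
    out_fps: float
    cam_fmt: str
    cam_w: int
    cam_h: int
    cam_fps: float
    vendor: str
    module: str

    @property
    def has_usb_output(self) -> bool:
        # NONE means the module runs without streaming video over USB
        return self.out_fmt != "NONE"

    @property
    def extra_rows(self) -> int:
        # Rows added below the camera image in the USB output (0 if there is no output)
        return self.out_h - self.cam_h if self.has_usb_output else 0

    @property
    def side_by_side(self) -> bool:
        # Output twice as wide as the camera image leaves room for a second panel
        return self.has_usb_output and self.out_w == 2 * self.cam_w


def parse_mapping(line: str) -> VideoMapping:
    """Parse e.g. 'Video Mapping: YUYV 352 194 120.0 YUYV 176 144 120.0 JeVois FirstVision'."""
    text = line.split(":", 1)[1] if ":" in line else line
    f = text.split()
    if len(f) != 10:
        raise ValueError(f"expected 10 fields, got {len(f)}: {line!r}")
    return VideoMapping(f[0], int(f[1]), int(f[2]), float(f[3]),
                        f[4], int(f[5]), int(f[6]), float(f[7]), f[8], f[9])


if __name__ == "__main__":
    for m in ("YUYV 176 194 120.0 YUYV 176 144 120.0 JeVois FirstVision",
              "YUYV 640 290 60.0 YUYV 320 240 60.0 JeVois FirstVision",
              "NONE 0 0 0.0 YUYV 320 240 60.0 JeVois FirstVision"):
        vm = parse_mapping(m)
        print(vm.cam_w, vm.cam_h, vm.cam_fps, vm.extra_rows, vm.side_by_side)
```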

Module Documentation

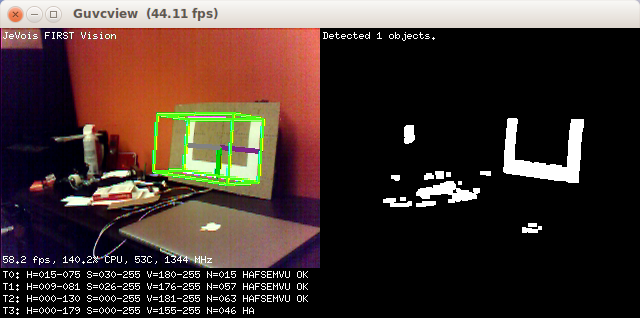

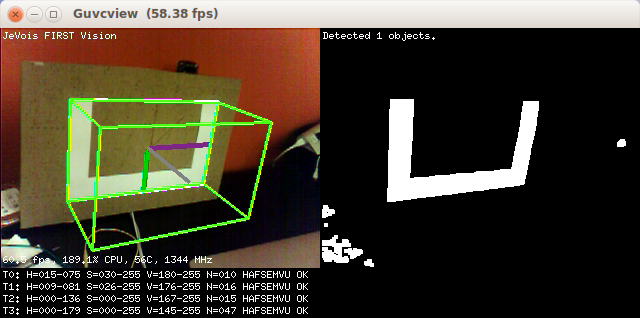

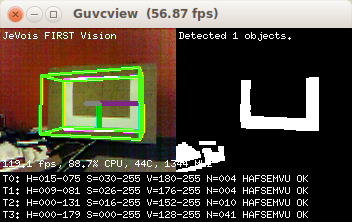

This module isolates pixels within a given HSV range (hue, saturation, and value of color pixels), does some cleanups, and extracts object contours. It looks for a rectangular U shape of a specific size (set by parameter objsize). See the screenshots for an example of the shape. It sends information about detected objects over serial.

This module usually works best with the camera sensor set to manual exposure, manual gain, manual color balance, etc., so that HSV color values are reliable. See the file script.cfg in this module's directory for an example of how to set the camera settings each time this module is loaded.

This code was loosely inspired by the JeVois ObjectTracker module. Also see FirstPython for a simplified version of this module, written in Python.

This module is provided for inspiration. It has no pretension of actually solving the FIRST Robotics vision problem in a complete and reliable way. It is released in the hope that FRC teams will try it out and get inspired to develop something much better for their own robot.

General pipeline

The basic idea of this module is the classic FIRST Robotics vision pipeline. First, select a range of pixels in HSV color space likely to include the object. Then, detect the contours of all blobs in that range. Then, apply some tests on the shape of the detected blobs, their size, their fill ratio (ratio of object area to its convex hull's area), etc. Finally, estimate the location and pose of the object in the world.
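As a rough illustration of the fill-ratio test, here is a minimal pure-Python sketch. It assumes a blob contour is given as a list of (x, y) pixel coordinates. It uses Andrew's monotone-chain convex hull and the shoelace area formula, which are not necessarily the routines the module itself uses. The function names are hypothetical. The percentage it returns is the quantity that parameter hullfill bounds from above.

```python
def polygon_area(pts):
    """Shoelace formula: area enclosed by a simple polygon given as ordered (x, y) vertices."""
    s = 0.0
    n = len(pts)
    for i in range(n):
        x0, y0 = pts[i]
        x1, y1 = pts[(i + 1) % n]
        s += x0 * y1 - x1 * y0
    return abs(s) / 2.0


def convex_hull(pts):
    """Andrew's monotone chain; returns hull vertices with collinear points removed."""
    p = sorted(set(pts))
    if len(p) <= 2:
        return p

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for q in p:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], q) <= 0:
            lower.pop()
        lower.append(q)
    for q in reversed(p):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], q) <= 0:
            upper.pop()
        upper.append(q)
    return lower[:-1] + upper[:-1]


def fill_percent(contour):
    """100 * contour area / convex hull area (0 for a degenerate hull)."""
    hull_area = polygon_area(convex_hull(contour))
    return 100.0 * polygon_area(contour) / hull_area if hull_area > 0 else 0.0


if __name__ == "__main__":
    # A thin U: 10 x 10 outer hull, arms and base 1 pixel thick
    u = [(0, 0), (10, 0), (10, 10), (9, 10), (9, 1), (1, 1), (1, 10), (0, 10)]
    print(len(convex_hull(u)), fill_percent(u))  # 4 hull vertices, 28% fill: passes hullfill = 50
    square = [(0, 0), (10, 0), (10, 10), (0, 10)]
    print(fill_percent(square))                  # 100% fill: rejected as a solid shape
```

The hull of a U is a quadrilateral, which is also what the later H test checks. Its fill stays low because the notch is empty.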

In this module, we run up to 4 pipelines in parallel (the number is set by parameter threads), using different settings for the range of HSV values considered:

- Pipeline 0 uses the HSV values provided by user parameters;

- Pipeline 1 broadens that fixed range a bit;

- Pipelines 2 and 3 use, respectively, a narrow and a broader HSV window learned over time.

Detections from all pipelines are compared for overlap and quality (raggedness of their outlines). Parameter iou sets the intersection-over-union ratio above which two detections count as duplicates, and only the cleanest of several overlapping detections is kept. From those cleanest detections, pipelines 2 and 3 learn and adapt their HSV ranges for future video frames.
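The duplicate-elimination step can be pictured as greedy non-maximum suppression. Visit detections from cleanest to most ragged, and drop any that overlaps an already-kept one by more than iou.

The sketch below is an assumption-laden stand-in, not the module's code. It measures overlap on axis-aligned bounding boxes rather than on the actual shapes, and it takes a precomputed raggedness score for each detection, where lower is cleaner. Detection, box_iou, and keep_cleanest are hypothetical names.

```python
from typing import NamedTuple, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1), with x1 > x0 and y1 > y0


class Detection(NamedTuple):
    box: Box
    raggedness: float  # lower means a cleaner outline (hypothetical score)
    thread: int        # pipeline that produced the detection


def box_iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def keep_cleanest(dets, iou=0.3):
    """Keep a detection unless it overlaps an already-kept, cleaner one by more than iou."""
    kept = []
    for d in sorted(dets, key=lambda d: d.raggedness):
        if all(box_iou(d.box, k.box) <= iou for k in kept):
            kept.append(d)
    return kept


if __name__ == "__main__":
    dets = [Detection((1, 1, 11, 11), 0.5, 2),    # same object seen by pipeline 2, more ragged
            Detection((0, 0, 10, 10), 0.2, 0),    # cleanest view of that object
            Detection((50, 50, 60, 60), 0.9, 1)]  # a separate object
    for d in keep_cleanest(dets):
        print(d.thread, d.box)
```

Sorting by quality first means the surviving copy of each object is always its cleanest one. That matches the description of which detection is preserved.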

Using this module

Check out this tutorial.

Detection and quality control steps

The following messages appear for each of the 4 pipelines, at the bottom of the demo video, to help users figure out why their object may not be detected:

- T0 to T3: thread (pipeline) number

- H=..., S=..., V=...: HSV range considered by that thread

- N=...: number of raw blobs detected in that range

- From this point on, each thread examines N blobs. Only information about the blob that progressed farthest through the following series of tests is shown. One letter is added each time a test is passed:

  - H: the convex hull of the blob is a quadrilateral (4 vertices).

  - A: the hull area is within the range specified by parameter hullarea.

  - F: the object-to-hull fill ratio is below the limit set by parameter hullfill (i.e., the object is not a solid, filled quadrilateral shape).

  - S: the object has 8 vertices after shape smoothing to eliminate small shape defects (a U shape is indeed expected to have 8 vertices).

  - E: the shape discrepancy between the original shape and the smoothed shape is acceptable per parameter ethresh, i.e., the original contour did not have a lot of defects.

  - M: the shape is not too close to the borders of the image, per parameter margin, i.e., it is unlikely to be truncated as the object partially exits the camera's field of view.

  - V: vectors describing the shape as it relates to its convex hull are non-zero, i.e., the centroid of the shape does not coincide exactly with the centroid of its convex hull, as we would expect for a U shape.

  - U: the shape is roughly upright; upside-down U shapes are rejected as likely spurious.

- OK: this thread detected at least one shape that passed all the tests.
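The letter display amounts to a chain of tests that stops at the first failure, reported for whichever blob got farthest. A minimal sketch of that bookkeeping follows. It assumes each blob's measurements are already computed. The BlobStats fields and the function names are hypothetical. The thresholds mirror the defaults of hullarea, hullfill, ethresh, and margin, but the exact comparisons (strict versus non-strict) are guesses.

```python
from dataclasses import dataclass

TESTS = "HAFSEMVU"  # order in which the tests are applied


@dataclass
class BlobStats:
    """Hypothetical per-blob measurements, assumed to be computed beforehand."""
    hull_vertices: int       # vertices of the (approximated) convex hull
    hull_area: float         # convex hull area, pixels
    fill_percent: float      # 100 * blob area / hull area
    smoothed_vertices: int   # vertices after shape smoothing
    shape_error: float       # discrepancy between raw and smoothed contours
    border_distance: float   # smallest corner-to-frame-border distance, pixels
    centroid_offset: tuple   # (dx, dy) from hull centroid to blob centroid
    upright: bool            # True if the U opens upward


def progress(b, hullarea=(400, 90000), hullfill=50, ethresh=900.0, margin=5):
    """Letters of the tests passed, in order, stopping at the first failure."""
    passed = (
        b.hull_vertices == 4,                       # H
        hullarea[0] <= b.hull_area <= hullarea[1],  # A
        b.fill_percent < hullfill,                  # F
        b.smoothed_vertices == 8,                   # S
        b.shape_error <= ethresh,                   # E
        b.border_distance >= margin,                # M
        any(c != 0 for c in b.centroid_offset),     # V
        b.upright,                                  # U
    )
    out = ""
    for letter, ok in zip(TESTS, passed):
        if not ok:
            break
        out += letter
    return out


def thread_status(blobs, **thresholds):
    """Best progress string among a thread's blobs, and whether any blob passed every test."""
    best = max((progress(b, **thresholds) for b in blobs), key=len, default="")
    return best, best == TESTS
```

For example, a solid, filled rectangle gets as far as "HA" and then fails F. A clean U shows all eight letters and sets the OK flag.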

The black and white picture at right shows the pixels in HSV range for the thread determined by parameter showthread (with value 0 by default).

Serial Messages

This module can send standardized serial messages as described in Standardized serial messages formatting. One message is issued on every video frame for each detected object that passes all the quality tests. The id field is simply FIRST for all messages.

When dopose is on, 3D messages are sent; otherwise, 2D messages are sent.

2D messages when dopose is off:

- Serial message type: 2D

- id: always FIRST

- x,y, or vertices: standardized 2D coordinates of object center or corners

- w,h: standardized object size

- extra: none (empty string)

3D messages when dopose is on:

- Serial message type: 3D

- id: always FIRST

- x,y,z, or vertices: 3D coordinates in millimeters of object center, or corners

- w,h,d: object size in millimeters; a depth of 1 mm is always used

- extra: none (empty string)

NOTE: 3D pose estimation from low-resolution 176x144 images at 120fps can be quite noisy. Make sure you tune your HSV ranges very well if you want to operate at 120fps (see below). To operate more reliably at very low resolutions, one may want to improve this module by adding subpixel shape refinement and tracking across frames.
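Why low resolution hurts can be seen from a back-of-envelope pinhole-camera approximation. This is not the module's full 6D pose computation. Let f be the focal length in pixels, W the physical object width, w its apparent width in pixels, and Z its distance. Differentiating the distance relation gives the relative error:

$$
Z \approx \frac{f\,W}{w}
\quad\Longrightarrow\quad
\frac{dZ}{dw} = -\frac{f\,W}{w^{2}} = -\frac{Z}{w}
\quad\Longrightarrow\quad
\left|\frac{\Delta Z}{Z}\right| \approx \frac{|\Delta w|}{w}
$$

A one-pixel error on the outline of a target spanning 20 pixels therefore shifts the distance estimate by roughly 5%. The same error on a target spanning 40 pixels costs about 2.5%. Subpixel shape refinement reduces Δw directly.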

See Standardized serial messages formatting for more on standardized serial messages, and Helper functions to convert coordinates from camera resolution to standardized for more info on standardized coordinates.
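On the receiving side, for example a robot controller reading the serial port, each message can be split into fields. The sketch below assumes these layouts:

- Normal style: N2 id x y w h, and N3 id x y z w h d.
- Detail style (the style set in script.cfg): D3 id x y z w h d q1 q2 q3 q4, with the extra field empty as stated above.

Verify these layouts against the Standardized serial messages formatting page before relying on them. The function name is hypothetical. The 2D Detail and Fine styles, which carry vertices, are deliberately not handled.

```python
def parse_first_message(line):
    """Split one standardized message into fields (assumed layouts, see lead-in)."""
    f = line.split()
    if not f:
        raise ValueError("empty message")
    kind, args = f[0], f[1:]
    if kind == "N2" and len(args) == 5:    # N2 id x y w h
        x, y, w, h = map(float, args[1:])
        return {"kind": kind, "id": args[0], "center": (x, y), "size": (w, h)}
    if kind == "N3" and len(args) == 7:    # N3 id x y z w h d
        x, y, z, w, h, d = map(float, args[1:])
        return {"kind": kind, "id": args[0], "center": (x, y, z), "size": (w, h, d)}
    if kind == "D3" and len(args) == 11:   # D3 id x y z w h d q1 q2 q3 q4 (empty extra)
        x, y, z, w, h, d, *q = map(float, args[1:])
        return {"kind": kind, "id": args[0], "center": (x, y, z), "size": (w, h, d),
                "quaternion": tuple(q)}
    raise ValueError(f"unsupported or malformed message: {line!r}")


if __name__ == "__main__":
    # Made-up example line, in millimeters as when dopose is on
    msg = parse_first_message("N3 FIRST 12.500 -40.000 1530.000 280.000 175.000 1.000")
    print(msg["id"], msg["center"], msg["size"])
```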

Trying it out

The default parameter settings (set in script.cfg, explained below) attempt to detect yellow-green objects. Present an object to the JeVois camera and see whether it is detected. When an object is detected and passes a number of quality control tests, its outline is drawn.

For further use of this module, you may want to check out the following tutorials:

- Using the sample FIRST Robotics vision module

- Tuning the color-based object tracker using a python graphical interface

- Making a motorized pan-tilt head for JeVois and tracking objects

- Tutorial on how to write Arduino code that interacts with JeVois

Tuning

You need to provide the exact width and height (in meters) of your physical shape to parameter objsize for this module to work. It will look for a shape of that physical size, at any distance and orientation from the camera. Be sure to edit script.cfg and set the parameter objsize there to the true measured physical size of your shape.
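To see why objsize must be accurate, consider two inputs a pose solver typically needs. The first is the target's corner points in its own metric frame, which come straight from objsize. The second is the image measurements.

The sketch below builds those corners and uses a simple pinhole approximation: distance equals focal length in pixels times physical width, divided by width in pixels. This shows that any relative error in objsize scales the estimated distance by the same factor. The helper names are hypothetical, and the corner order and axis convention are illustrative assumptions. The 300-pixel focal length in the example is made up; real values come from your camera calibration.

```python
def model_corners(objsize):
    """Corners (meters) of a planar width x height target centered at the origin, z = 0.
    Corner order and axis directions are illustrative assumptions."""
    w, h = objsize
    hw, hh = w / 2.0, h / 2.0
    return [(-hw, -hh, 0.0), (hw, -hh, 0.0), (hw, hh, 0.0), (-hw, hh, 0.0)]


def pinhole_distance(focal_px, width_m, width_px):
    """Approximate distance (meters) to a roughly fronto-parallel target of known width."""
    return focal_px * width_m / width_px


if __name__ == "__main__":
    f_px = 300.0    # made-up focal length in pixels; use your calibration
    seen_px = 60.0  # measured width of the target in the image, pixels
    d_true = pinhole_distance(f_px, 0.280, seen_px)
    d_wrong = pinhole_distance(f_px, 0.280 * 1.1, seen_px)  # objsize entered 10% too large
    print(model_corners((0.280, 0.175)))
    print(d_true, d_wrong, d_wrong / d_true)  # the distance error tracks the objsize error
```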

You should adjust parameters hcue, scue, and vcue to isolate the range of Hue, Saturation, and Value (respectively) that correspond to the objects you want to detect. Note that there is a script.cfg file in this module's directory that provides a range tuned to a light yellow-green object, as shown in the demo screenshot.

Tuning the parameters is best done interactively by connecting to your JeVois camera while it is looking at some object of the desired color. Once you have a good tuning, you may want to set the hcue, scue, and vcue parameters in your script.cfg file for this module on the microSD card (see below).

Typically, you would start by narrowing down the hue, then the value, and finally the saturation. Make sure you also move your camera around and show it typical background clutter to check for false positives (detections of things in which you are not interested, which can happen if your ranges are too wide).
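For intuition while tuning, the sketch below converts an 8-bit RGB pixel to HSV and tests it against the cues. It uses 0-179 hue and 0-255 saturation and value scales, matching the hcue range and the OpenCV 8-bit convention. It assumes a fixed hue half-width around hcue, which is a hypothetical value. The module's actual hue window and its learned ranges are not reproduced here. The hue test is a plain interval with no wraparound handling, which illustrates why hcue values near 0 (red) are discouraged.

```python
import colorsys


def rgb_to_opencv_hsv(r, g, b):
    """8-bit RGB to HSV scaled like OpenCV's 8-bit convention (H 0..179, S and V 0..255)."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return round(h * 180) % 180, round(s * 255), round(v * 255)


def in_cue_range(rgb, hcue=45, scue=50, vcue=200, hue_halfwidth=10):
    """True if hue is within hcue +/- hue_halfwidth (hypothetical width), S >= scue and V >= vcue."""
    h, s, v = rgb_to_opencv_hsv(*rgb)
    # Plain linear hue interval: a window around red would need to wrap past 0/179
    return abs(h - hcue) <= hue_halfwidth and s >= scue and v >= vcue


if __name__ == "__main__":
    for name, rgb in (("light yellow-green", (170, 255, 100)),
                      ("red", (255, 0, 0)),
                      ("dark green", (50, 80, 30))):
        print(name, rgb_to_opencv_hsv(*rgb), in_cue_range(rgb))
```

The dark green example has an acceptable hue but fails on value. This is why the value cue is usually narrowed early.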

Config file

JeVois allows you to store parameter settings and commands in a file named script.cfg stored in the directory of a module. The file script.cfg may contain any sequence of commands as you would type them interactively in the JeVois command-line interface. For the FirstVision module, a default script is provided that sets the camera to manual color, gain, and exposure mode (for more reliable color values), and other example parameter values.

The script.cfg file for FirstVision is stored on your microSD at JEVOIS:/modules/JeVois/FirstVision/script.cfg

| Parameter | Type | Description | Default | Valid Values |
|---|---|---|---|---|
| (FirstVision) hcue | `unsigned char` | Initial cue for target hue (0=red/do not use because of wraparound, 30=yellow, 45=light green, 60=green, 75=green cyan, 90=cyan, 105=light blue, 120=blue, 135=purple, 150=pink) | `45` | `jevois::Range<unsigned char>(0, 179)` |
| (FirstVision) scue | `unsigned char` | Initial cue for target saturation lower bound | `50` | - |
| (FirstVision) vcue | `unsigned char` | Initial cue for target value (brightness) lower bound | `200` | - |
| (FirstVision) maxnumobj | `size_t` | Max number of objects to declare a clean image. If more blobs are detected in a frame, we skip that frame before we even try to analyze shapes of the blobs | `100` | - |
| (FirstVision) hullarea | `jevois::Range<unsigned int>` | Range of object area (in pixels) to track. Use this if you want to skip shape analysis of very large or very small blobs | `jevois::Range<unsigned int>(20*20, 300*300)` | - |
| (FirstVision) hullfill | `int` | Max fill ratio of the convex hull (percent). Lower values mean your shape occupies a smaller fraction of its convex hull. This parameter sets an upper bound; fuller shapes will be rejected. | `50` | `jevois::Range<int>(1, 100)` |
| (FirstVision) erodesize | `size_t` | Erosion structuring element size (pixels), or 0 for no erosion | `2` | - |
| (FirstVision) dilatesize | `size_t` | Dilation structuring element size (pixels), or 0 for no dilation | `4` | - |
| (FirstVision) epsilon | `double` | Shape smoothing factor (higher for smoother). Shape smoothing is applied to remove small contour defects before the shape is analyzed. | `0.015` | `jevois::Range<double>(0.001, 0.999)` |
| (FirstVision) debug | `bool` | Show contours of all object candidates if true | `false` | - |
| (FirstVision) threads | `size_t` | Number of parallel vision processing threads. Thread 0 uses the HSV values provided by user parameters; thread 1 broadens that fixed range a bit; threads 2-3 use a narrow and a broader HSV window learned over time | `4` | `jevois::Range<size_t>(2, 4)` |
| (FirstVision) showthread | `size_t` | Thread number that is used to display the HSV-thresholded image | `0` | `jevois::Range<size_t>(0, 3)` |
| (FirstVision) ethresh | `double` | Shape error threshold (lower is stricter for exact shape) | `900.0` | `jevois::Range<double>(0.01, 1000.0)` |
| (FirstVision) dopose | `bool` | Compute (and show) 6D object pose; requires a valid camera calibration. When dopose is true, 3D serial messages are sent out, otherwise 2D serial messages. | `true` | - |
| (FirstVision) iou | `double` | Intersection-over-union ratio over which duplicates are eliminated | `0.3` | `jevois::Range<double>(0.01, 0.99)` |
| (FirstVision) objsize | `cv::Size_<float>` | Object size (in meters) | `cv::Size_<float>(0.28F, 0.175F)` | - |
| (FirstVision) margin | `size_t` | Margin from frame borders (pixels). If any corner of a detected shape gets closer than the margin to the frame borders, the shape will be rejected. This is to avoid possibly bogus 6D pose estimation when the shape starts getting truncated as it partially exits the camera's field of view. | `5` | - |
| (Kalman1D) usevel | `bool` | Use velocity tracking, in addition to position | `false` | - |
| (Kalman1D) procnoise | `float` | Process noise standard deviation | `0.003F` | - |
| (Kalman1D) measnoise | `float` | Measurement noise standard deviation | `0.05F` | - |
| (Kalman1D) postnoise | `float` | A posteriori error estimate standard deviation | `0.3F` | - |
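The Kalman1D parameters above describe a one-dimensional Kalman filter. A minimal position-only sketch (the usevel = false case) follows. It treats the three noise parameters as standard deviations that get squared into variances, as their descriptions suggest. It is a textbook scalar filter, not JeVois's Kalman1D class.

One plausible use, in the spirit of pipelines 2 and 3 learning their HSV windows over time, is smoothing a per-frame hue estimate. The noise values in the example come from script.cfg; the hue readings are made up.

```python
class ScalarKalman:
    """Textbook 1D Kalman filter with a constant-position model (the usevel = false case).
    Not JeVois's Kalman1D class; noise parameters are treated as standard deviations."""

    def __init__(self, x0, procnoise, measnoise, postnoise):
        self.x = float(x0)       # state estimate
        self.q = procnoise ** 2  # process noise variance
        self.r = measnoise ** 2  # measurement noise variance
        self.p = postnoise ** 2  # a posteriori error variance

    def step(self, z):
        """Predict, then correct with measurement z; returns the new estimate."""
        self.p += self.q                # predict: state unchanged, uncertainty grows
        k = self.p / (self.p + self.r)  # Kalman gain
        self.x += k * (z - self.x)      # correct toward the measurement
        self.p *= 1.0 - k               # uncertainty shrinks after the update
        return self.x


if __name__ == "__main__":
    # Noise values from script.cfg: procnoise 5.0, measnoise 20.0, postnoise 5.0
    kf = ScalarKalman(45, procnoise=5.0, measnoise=20.0, postnoise=5.0)
    for z in (60, 62, 58, 61, 59):  # made-up noisy hue readings
        print(round(kf.step(z), 2))
```

A large measnoise relative to procnoise makes the estimate move slowly, so a single bad frame barely shifts the learned value.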

script.cfg file:

```
# Demo configuration script for FirstVision module.

###################################################################################################################
# Essential parameters:
###################################################################################################################

# Detect a light green target initially (will adapt over time):
#
# hcue: 0=red/do not use because of wraparound, 30=yellow, 45=light green, 60=green, 75=green cyan, 90=cyan, 105=light
#       blue, 120=blue, 135=purple, 150=pink
# scue: 0 for unsaturated (whitish discolored object) to 255 for fully saturated (solid color)
# vcue: 0 for dark to 255 for maximally bright
setpar hcue 45
setpar scue 50
setpar vcue 200

# IMPORTANT: Set true width and height in meters of the U-shaped object we wish to detect:
# THIS IS REQUIRED FOR THE 6D POSE ESTIMATION TO WORK.
# In our lab, we had a U shape that is 28cm wide by 17.5cm high (outer convex hull dimensions)
setpar objsize 0.280 0.175

# Set camera to fixed color balance, gain, and exposure, so that we get more reliable colors than we would obtain under
# automatic mode:
setcam presetwb 0
setcam autowb 0
setcam autogain 0
setcam autoexp 0
setcam redbal 110
setcam bluebal 170
setcam gain 16
setcam absexp 500

# Send info log messages to None, Hard, or USB serial port - useful for debugging:
#setpar serlog None
#setpar serlog Hard
#setpar serlog USB

# Send serial strings with detected objects to None, Hard, or USB serial port:
#setpar serout None
#setpar serout Hard
#setpar serout USB

# Compute (and show) 6D object pose, requires a valid camera calibration. When dopose is true, 3D serial messages are
# sent out, otherwise 2D serial messages:
setpar dopose true

# Get detailed target info in our serial messages:
setpar serstyle Detail
setpar serprec 3

###################################################################################################################
# Parameters you can play with to optimize operation:
###################################################################################################################

# Some tuning of our Kalman filters (used for learning over time):
setpar procnoise 5.0
setpar measnoise 20.0
setpar postnoise 5.0
setpar usevel false

# Max number of blobs in the video frame. If more blobs are detected in a frame, we skip that frame before we even try
# to analyze shapes of the blobs:
setpar maxnumobj 100

# Range of object area (in pixels) to track
setpar hullarea 400 ... 90000

# Max fill ratio of the convex hull (percent). Lower values mean your shape occupies a smaller fraction of its convex
# hull. This parameter sets an upper bound, fuller shapes will be rejected:
setpar hullfill 50

# Erosion structuring element size (pixels), or 0 for no erosion:
setpar erodesize 2

# Dilation structuring element size (pixels), or 0 for no dilation:
setpar dilatesize 4

# Shape smoothing factor (higher for smoother). Shape smoothing is applied to remove small contour defects before the
# shape is analyzed:
setpar epsilon 0.015

# Show contours of all object candidates if true:
setpar debug false

# Number of parallel vision processing threads. Thread 0 uses the HSV values provided by user parameters; thread 1
# broadens that fixed range a bit; threads 2-3 use a narrow and broader learned HSV window over time:
setpar threads 4

# Thread number that is used to display HSV-thresholded image:
setpar showthread 0

# Shape error threshold (lower is stricter for exact shape):
setpar ethresh 900

# Intersection-over-union ratio over which duplicates are eliminated:
setpar iou 0.3

# Margin from frame borders (pixels). If any corner of a detected shape gets closer than the margin to the frame
# borders, the shape will be rejected. This is to avoid possibly bogus 6D pose estimation when the shape starts getting
# truncated as it partially exits the camera's field of view:
setpar margin 5
```

| Detailed docs: | FirstVision |
|---|---|
| Copyright: | Copyright (C) 2017 by Laurent Itti, iLab and the University of Southern California |
| License: | GPL v3 |
| Distribution: | Unrestricted |
| Restrictions: | None |
| Support URL: | http://jevois.org/doc |
| Other URL: | http://iLab.usc.edu |
| Address: | University of Southern California, HNB-07A, 3641 Watt Way, Los Angeles, CA 90089-2520, USA |